1. Building the FastAPI service

First, install the Python libraries that FastAPI needs into the virtual environment (venv or conda) of the algorithm service you want to expose:

pip install fastapi

pip install uvicorn

# FastAPI needs python-multipart to parse uploaded files (UploadFile)

pip install python-multipart

Next, write a simple FastAPI service tailored to the algorithm. An example follows:

# -*- coding: utf-8 -*-
# @Time : 2025/4/1 17:34
# @Author : TyranitarX
import argparse
import time

import mcubes
import numpy as np
import point_cloud_utils as pcu
import torch
import trimesh
from fastapi import FastAPI, File, UploadFile
from starlette.responses import FileResponse

from model.cuboidSegNet import CuboidSegNet

app = FastAPI()

N_vol = 2048   # volume points
N_near = 1024  # near-surface points
N_face = 2048


@app.post("/execute/", response_model=None)
async def create_item(
    surfacefile: UploadFile = File(...)  # algorithm input
):
    parser = argparse.ArgumentParser()
    # Hyperparameters required by the model
    parser.add_argument('--E_name', default='EXP_1', type=str, help='Experiment name')
    # ...
    # Parse an empty list: under uvicorn, sys.argv holds uvicorn's own
    # arguments, which this parser would otherwise reject.
    args = parser.parse_args([])

    # Load the model
    model = CuboidSegNet.load_from_checkpoint('../ckpts/newloss.ckpt', params=args, map_location='cuda')

    # Preprocess the input
    # ...
    out = model(face.cuda(), vol.cuda())

    # Postprocess the output
    # ...
    filename = f"output_{time.time()}.obj"

    # Save the result file
    mcubes.export_obj(vertices, faces, filename)

    # Return the model result
    return FileResponse(
        filename,
        media_type="application/octet-stream",
        filename=filename
    )
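
The handler above loads the checkpoint inside the request function, so the model is read from disk on every call. One possible refinement, sketched here under assumptions, is to cache the loaded model so that the expensive load happens once per process. `load_model` is a hypothetical stand-in for the `CuboidSegNet.load_from_checkpoint` call; its dummy body exists only so the sketch runs without a GPU or the real checkpoint.

```python
from functools import lru_cache


@lru_cache(maxsize=1)
def load_model(ckpt_path: str):
    """Hypothetical loader standing in for CuboidSegNet.load_from_checkpoint(...)."""
    # A real service would read the checkpoint here and return the model object.
    return {"ckpt": ckpt_path}


def handle_request(ckpt_path: str = "../ckpts/newloss.ckpt"):
    # Every request asks for the model, but only the first call actually loads it;
    # later calls with the same path get the cached object back.
    return load_model(ckpt_path)


if __name__ == "__main__":
    first = handle_request()
    second = handle_request()
    # Both requests share one object, and the loader body ran only once.
    print(first is second, load_model.cache_info().misses)
```

In the real service the same idea would wrap the checkpoint load, so that `create_item` only performs preprocessing, inference and export.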

2. Writing the Dockerfile

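With the service in place, you can build a Docker image that contains the algorithm service. The core of this step is writing the Dockerfile used for the build.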

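1) Before building the image, package the algorithm's virtual environment so that it can be used inside the container. conda is used as the example here.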

# Install conda-pack

conda install conda-pack

# Package the environment with conda-pack (replace ENV_NAME with your environment name)

conda pack -n ENV_NAME -o ENV_NAME.tar.gz

When the command succeeds, it produces the packaged environment archive.
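
Before copying the archive into an image, it can be worth a quick sanity check that it really contains a Python interpreter. The sketch below is only an illustration: `env_archive_has_python` is a hypothetical helper, and it assumes a Linux environment packed with the environment root at the top level of the archive, so that the interpreter appears as `bin/python`.

```python
import tarfile


def env_archive_has_python(archive_path: str) -> bool:
    """Return True if the .tar.gz archive contains a bin/python entry."""
    with tarfile.open(archive_path, "r:gz") as tar:
        # Iterate member by member so a large environment is streamed,
        # and stop as soon as the interpreter is found.
        for member in tar:
            name = member.name
            # Entry names may or may not carry a leading "./".
            if name.startswith("./"):
                name = name[2:]
            if name in ("bin/python", "bin/python3"):
                return True
    return False
```

Calling `env_archive_has_python("ENV_NAME.tar.gz")` on the archive produced above should return `True` under those assumptions; a `False` result suggests the wrong environment was packed or the archive uses a different layout.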

2) Next, write the Dockerfile. Create a file named Dockerfile in the algorithm's project directory with the following content:

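# Use the official conda image
FROM continuumio/miniconda3

# Set the working directory (the application code below is laid out relative to /)
WORKDIR /

# Copy the packed Conda environment (replace ENV_NAME with your environment name)
COPY ENV_NAME.tar.gz .

# Unpack the Conda environment
RUN mkdir -p /opt/conda/envs/ENV_NAME && \
    tar -xzf ENV_NAME.tar.gz -C /opt/conda/envs/ENV_NAME && \
    rm ENV_NAME.tar.gz

# Activate the Conda environment in interactive shells
RUN echo "source $(conda info --base)/etc/profile.d/conda.sh" >> ~/.bashrc && \
    echo "conda activate ENV_NAME" >> ~/.bashrc

# Put the environment first on PATH so that CMD finds its uvicorn
ENV PATH=/opt/conda/envs/ENV_NAME/bin:$PATH

# Copy the application code (adjust to your own directories)
COPY ./ckpts /ckpts
COPY ./model /model
COPY ./tools /tools
COPY fastServer.py .

# Expose the FastAPI port
EXPOSE 8000

# Run FastAPI. The port set here is the port inside the container;
# when starting the container, use -p to choose the host port it is published on.
CMD ["uvicorn", "fastServer:app", "--host", "0.0.0.0", "--port", "8000"]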

Then run the following in the current directory:

docker build -t IMAGE_NAME .

# The build then prints output similar to the following

[+] Building 65.0s (13/13) FINISHED docker:default

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 727B 0.0s

=> [internal] load metadata for docker.io/continuumio/miniconda3:latest 64.7s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 65B 0.0s

=> [1/9] FROM docker.io/continuumio/miniconda3:latest@sha256:4a2425c3ca891633e5a27280120f3fb6d5960a0f509b7594632cdd5bb8cbaea8 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 5.10kB 0.0s

=> CACHED [2/9] COPY DEPF_ENV.tar.gz . 0.0s

=> CACHED [3/9] RUN mkdir -p /opt/conda/envs/DEPF && tar -xzf DEPF_ENV.tar.gz -C /opt/conda/envs/DEPF && rm DEPF_ENV.tar 0.0s

=> CACHED [4/9] RUN echo "source $(conda info --base)/etc/profile.d/conda.sh" >> ~/.bashrc && echo "conda activate DEPF" >> 0.0s

=> CACHED [5/9] COPY ./ckpts /ckpts 0.0s

=> [6/9] COPY ./model /model 0.1s

=> [7/9] COPY ./tools /tools 0.0s

=> [8/9] COPY fastServer.py . 0.0s

=> exporting to image 0.2s

=> => exporting layers 0.2s

=> => writing image sha256:b01629a61370e51017e401980671bc2d83bec408abd2292fcef714c59d5c2537 0.0s

=> => naming to docker.io/library/algserver

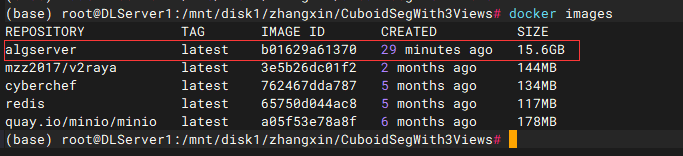

Running docker images now lists the newly built image.

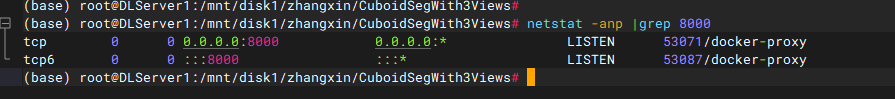

Run docker run -d -p 8000:8000 algserver to start the container.

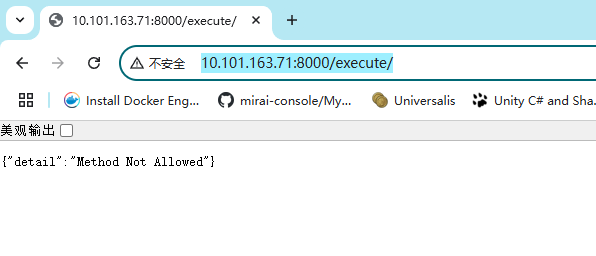
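
Because the container is started in detached mode, it can be handy to wait until the published port accepts connections before sending requests. The following is a minimal sketch, not part of the original setup: `wait_for_port` is a hypothetical helper, and a successful TCP connect only shows that something is listening on the host port, not that the model has finished loading.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # create_connection raises OSError while nothing is listening.
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:
            time.sleep(0.5)
    return False


if __name__ == "__main__":
    # 8000 is the host port chosen with -p 8000:8000.
    print("service reachable:", wait_for_port("127.0.0.1", 8000, timeout=5.0))
```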

The service starts on the published port as expected, and the endpoint is reachable.
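
To exercise the endpoint from a script, a client has to send the input as a multipart/form-data upload whose field name matches the handler parameter, `surfacefile`. The sketch below uses only the Python standard library; `build_multipart` and `post_surface_file` are hypothetical helper names, and the file names in the usage note are placeholders.

```python
import os
import urllib.request
import uuid


def build_multipart(field: str, filename: str, data: bytes):
    """Encode one file as a multipart/form-data body; returns (body, content_type)."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def post_surface_file(url: str, path: str, out_path: str) -> None:
    """Upload the file at `path` to `url` and save the response body to `out_path`."""
    with open(path, "rb") as fh:
        body, content_type = build_multipart(
            "surfacefile", os.path.basename(path), fh.read()
        )
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": content_type}, method="POST"
    )
    with urllib.request.urlopen(request) as response, open(out_path, "wb") as out:
        out.write(response.read())
```

With the container running, a call such as `post_surface_file("http://127.0.0.1:8000/execute/", "input.obj", "result.obj")` would upload `input.obj` and write the returned file to `result.obj`.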